- Key details

Accessing multiple data sources with data scraping.

- Client

The client is a nonprofit organization that supports African American small businesses and entrepreneurs. It is proud to provide services that help African American business owners win grants and succeed in competitions.

- Challenge

Fast and accurate data scraping from multiple sources

The client regularly handles large volumes of data from various sources, so data management had become a concern.

They wanted to scrape job offers, mentorship, and networking opportunities for talented African American entrepreneurs from various websites and publish them on their own platform, so that entrepreneurs can easily discover businesses owned by African Americans and support them, or locate their own.

Nobel Link was challenged to develop a strong data scraping solution for the client’s marketplace.

- Solution

Best practices for robust & resilient web scraping

Our team of engineers applied its data scraping expertise to enable effective data collection from various sources.

The Nobel Link team set up the following infrastructure and code flow for the client:

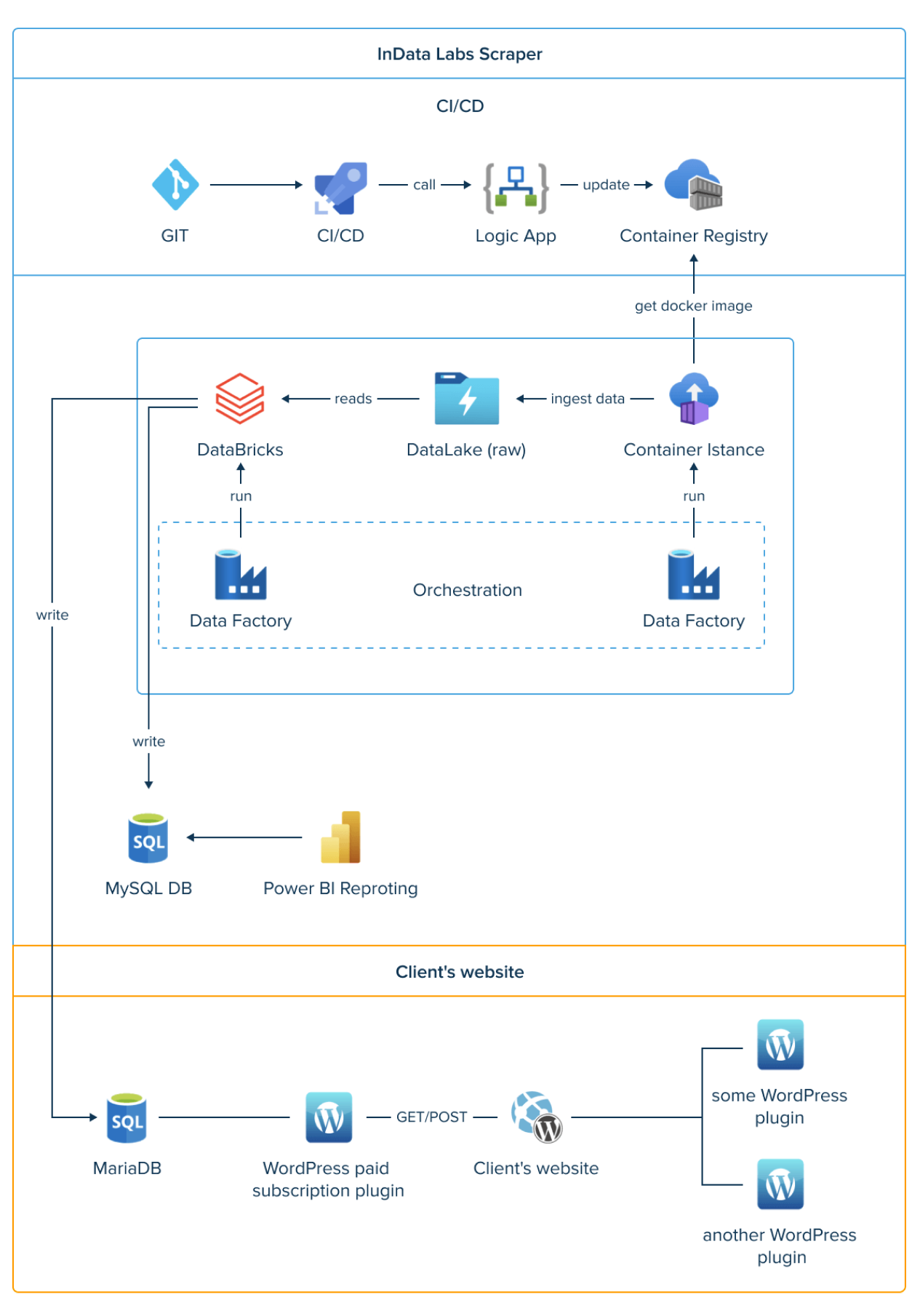

1. Git and CI/CD part

For code management, we used an Azure DevOps repository with a pipeline that let our team build Docker images and push them to the registry using a parallel job agent.

2. Registry and Logic App part

Next, we created an Azure Container Registry in the Azure portal to store our Docker images. We then used Azure Logic Apps to create container instances from those images, so that each scraper could run separately and in parallel.

3. Scraper part

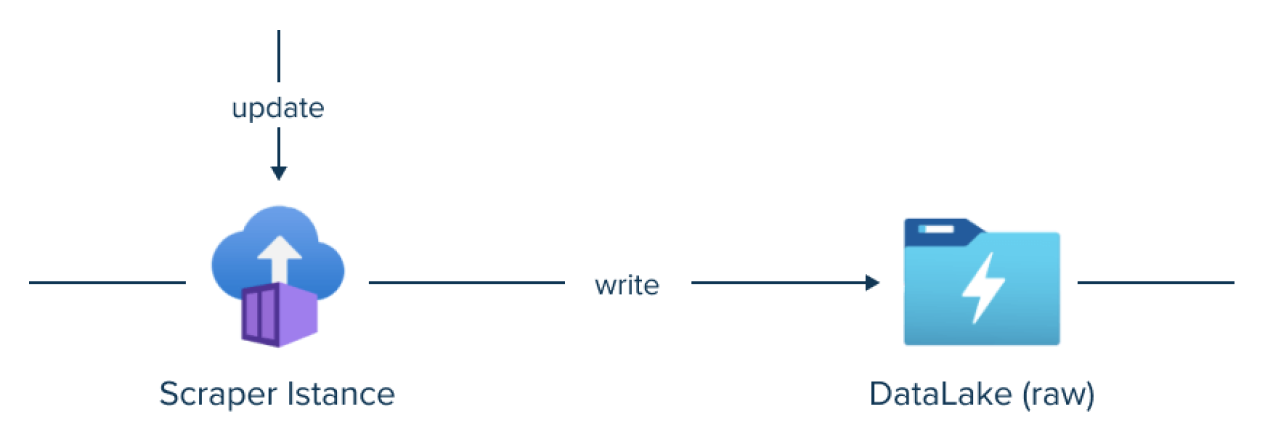

During this stage, the Nobel Link team created the container instances with Logic Apps. Each container then needed access to Azure resources and to sensitive data, such as passwords and connection strings, that were stored in Azure Key Vault.

To store scraper outputs, our team created a Storage Account, which acts as a cloud folder for the scraped data. At that point we could start the scrapers manually, but we still needed orchestration, automation, and post-processing.
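As a rough illustration of how a scraper container could read its secrets at start-up, the sketch below fetches a set of named secrets through a client object that exposes `get_secret(name).value`, which is the shape of `SecretClient` in the `azure-keyvault-secrets` Python package. The helper `load_scraper_secrets`, the secret names, and the `FakeSecretClient` stand-in are all hypothetical and are not taken from the project; the fake client is there only so the example runs without Azure access.

```python
from dataclasses import dataclass
from typing import Dict, Iterable, Mapping, Optional


def load_scraper_secrets(client, names: Iterable[str]) -> Dict[str, Optional[str]]:
    """Fetch each named secret once and return a name -> value mapping.

    `client` is anything with a `get_secret(name)` method whose result has a
    `.value` attribute (assumption: an azure.keyvault.secrets.SecretClient
    in a real container).
    """
    return {name: client.get_secret(name).value for name in names}


@dataclass
class _FakeSecret:
    value: str


class FakeSecretClient:
    """Hypothetical in-memory stand-in for a Key Vault client (demo only)."""

    def __init__(self, secrets: Mapping[str, str]) -> None:
        self._secrets = dict(secrets)

    def get_secret(self, name: str) -> _FakeSecret:
        # Raises KeyError for unknown names; a real client raises its own error type.
        return _FakeSecret(self._secrets[name])


if __name__ == "__main__":
    # Secret names below are illustrative assumptions.
    demo_client = FakeSecretClient(
        {"db-connection-string": "Server=example;", "proxy-password": "not-a-real-password"}
    )
    secrets = load_scraper_secrets(demo_client, ["db-connection-string", "proxy-password"])
    print(sorted(secrets))
```

In a real container, the client could be built with `SecretClient(vault_url=..., credential=DefaultAzureCredential())` from the `azure-keyvault-secrets` and `azure-identity` packages, which keeps passwords and connection strings out of the image and the repository.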

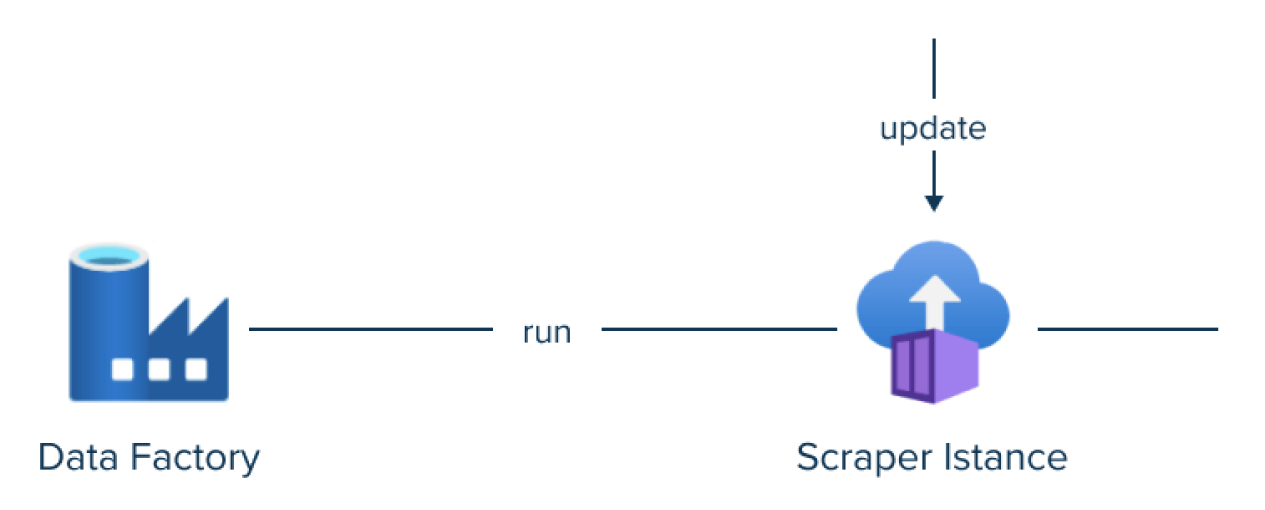

4. Data Factory and orchestration part

Our engineers used Azure Data Factory to run all the scrapers on a time trigger within a single pipeline run. The main pipeline starts all the containers with requests to the Azure API, then runs Databricks notebooks to process the collected data.

5. Databricks

At this stage, we scraped all the data from the websites in full, since incremental loading from these websites is difficult or not possible, and then processed it and saved it to the database. Before loading the new data, we deleted the existing data.
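The order of operations in the main pipeline can be sketched in a few lines. The example below is a simplified stand-in for the Data Factory pipeline, not the actual implementation: it builds the Azure Resource Manager "start" URL for each container group, sends one request per group through an injected `post` callable, and only then triggers the notebook step. The function names, the container group names, and the `api-version` value are assumptions, and authentication and waiting for the containers to finish are deliberately left out.

```python
from typing import Callable, Iterable, List
from urllib.parse import quote

ARM_BASE = "https://management.azure.com"
API_VERSION = "2023-05-01"  # assumption: use whichever api-version your subscription supports


def container_group_start_url(
    subscription_id: str,
    resource_group: str,
    group_name: str,
    api_version: str = API_VERSION,
) -> str:
    """Build the ARM URL that starts one container group (sent as a POST)."""
    return (
        f"{ARM_BASE}/subscriptions/{quote(subscription_id, safe='')}"
        f"/resourceGroups/{quote(resource_group, safe='')}"
        f"/providers/Microsoft.ContainerInstance"
        f"/containerGroups/{quote(group_name, safe='')}"
        f"/start?api-version={api_version}"
    )


def run_pipeline(
    subscription_id: str,
    resource_group: str,
    group_names: Iterable[str],
    post: Callable[[str], None],
    run_notebooks: Callable[[], None],
) -> List[str]:
    """Start every scraper container, then run the processing step once.

    `post` and `run_notebooks` are injected so the sketch stays testable;
    in production they would wrap authenticated HTTP calls.
    """
    started: List[str] = []
    for name in group_names:
        post(container_group_start_url(subscription_id, resource_group, name))
        started.append(name)
    # A real pipeline would wait here until the containers have finished.
    run_notebooks()
    return started


if __name__ == "__main__":
    run_pipeline(
        "00000000-0000-0000-0000-000000000000",  # placeholder subscription id
        "scrapers-rg",                           # hypothetical resource group
        ["scraper-jobs", "scraper-mentorship"],  # hypothetical container groups
        post=lambda url: print("POST", url),
        run_notebooks=lambda: print("run notebooks"),
    )
```

Injecting `post` and `run_notebooks` keeps the ordering logic separate from the HTTP and authentication details, which Data Factory handles through its own activities.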
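The delete-then-load pattern described here is easiest to reason about when both steps share one transaction, so that a failed load does not leave the table empty. The sketch below illustrates this with Python's built-in `sqlite3` as a stand-in for the project's database; the `listings` table, its columns, and the helper names are hypothetical.

```python
import sqlite3
from typing import Iterable, Optional, Tuple

Row = Tuple[str, Optional[str], str]  # (source, title, url)


def make_demo_db() -> sqlite3.Connection:
    """Create an in-memory database with a hypothetical `listings` table."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE listings ("
        "source TEXT NOT NULL, title TEXT NOT NULL, url TEXT NOT NULL)"
    )
    return conn


def full_reload(conn: sqlite3.Connection, rows: Iterable[Row]) -> int:
    """Replace the whole table with freshly scraped rows.

    The `with conn:` block commits if both statements succeed and rolls
    back if either raises, so the old data survives a failed load.
    Returns the number of rows in the table after the reload.
    """
    with conn:
        conn.execute("DELETE FROM listings")
        conn.executemany(
            "INSERT INTO listings (source, title, url) VALUES (?, ?, ?)", rows
        )
    return conn.execute("SELECT COUNT(*) FROM listings").fetchone()[0]


if __name__ == "__main__":
    db = make_demo_db()
    # Sample rows are made up for the demo.
    full_reload(db, [("site-a", "Bakery", "https://example.com/1"),
                     ("site-b", "Studio", "https://example.com/2")])
    print(full_reload(db, [("site-a", "Bakery", "https://example.com/1")]))
```

A full reload trades extra processing for simplicity: there is no need to detect which listings changed on the source websites, at the cost of rewriting every row on each run.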

As a result, the client received a robust data scraping solution that collects data from multiple sites and business listings and gathers information about businesses founded by African Americans that is useful to subscribers of the client’s platform.

- Result

Data scraping optimization for reduced processing time

Our team of data scientists and engineers drew upon multiple sources to meet the client’s data scraping needs. Our solution has empowered the client in the following ways:

- Data extraction at scale

- Structured data delivered

- Low-maintenance and speed

- Easy to implement

- Automation
